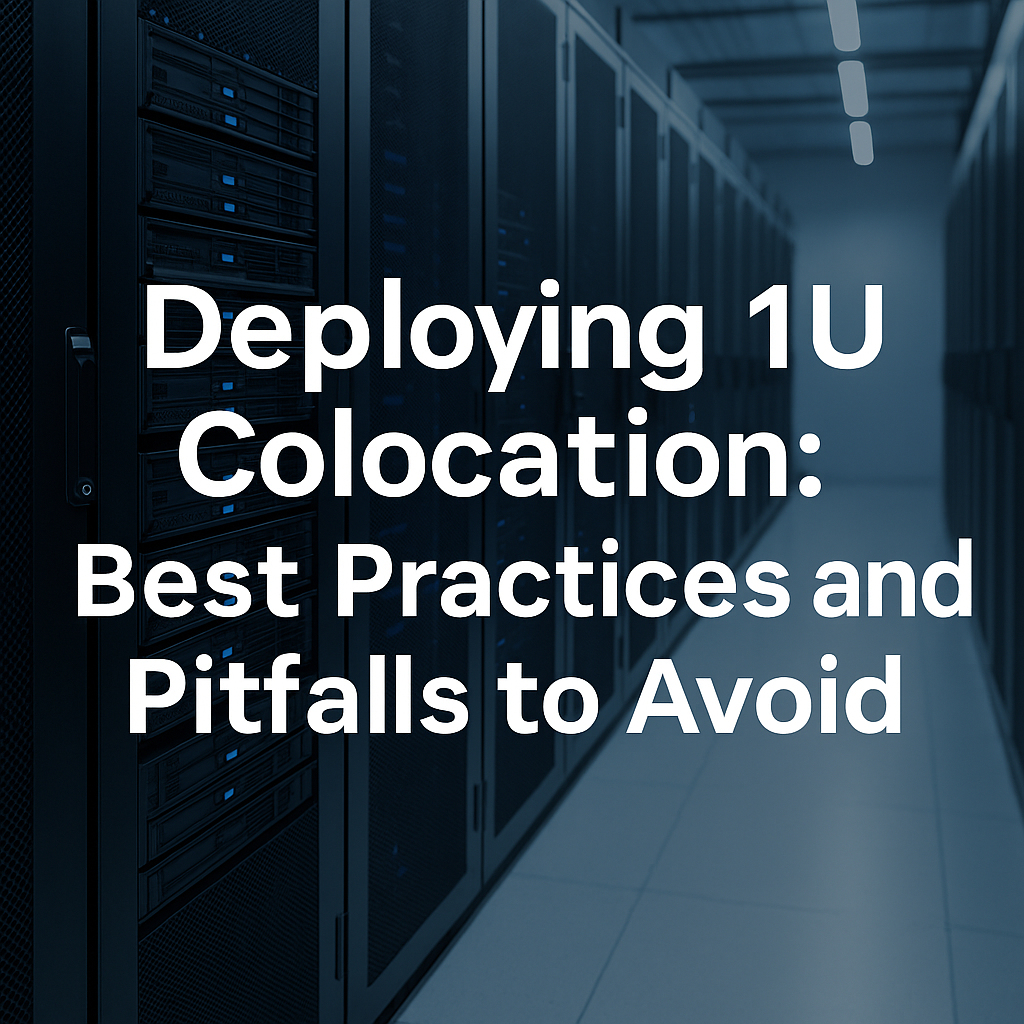

When you first hear about 1U colocation, it sounds simple enough:

“Ship us your 1U server, and we’ll put it in our datacenter rack.”

In theory, that’s it.

In reality, it’s anything but.

If you’ve never deployed equipment in a shared colocation environment before, you’re walking into a maze of mechanical, electrical, and procedural complications that can cost you money, downtime, and sanity.

This guide isn’t marketing. It’s a warning-heavy field manual built from experience gained the hard way.

It’s designed to show you what actually happens when you colocate a single server or router in a datacenter, the traps that aren’t mentioned in sales calls, and how to do it right the first time.

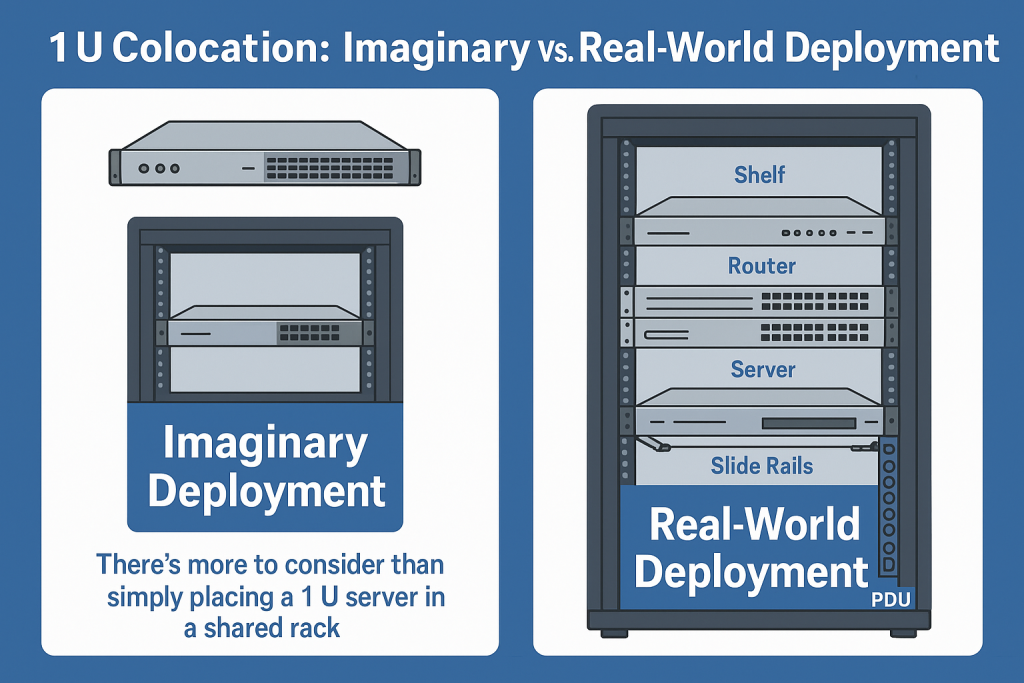

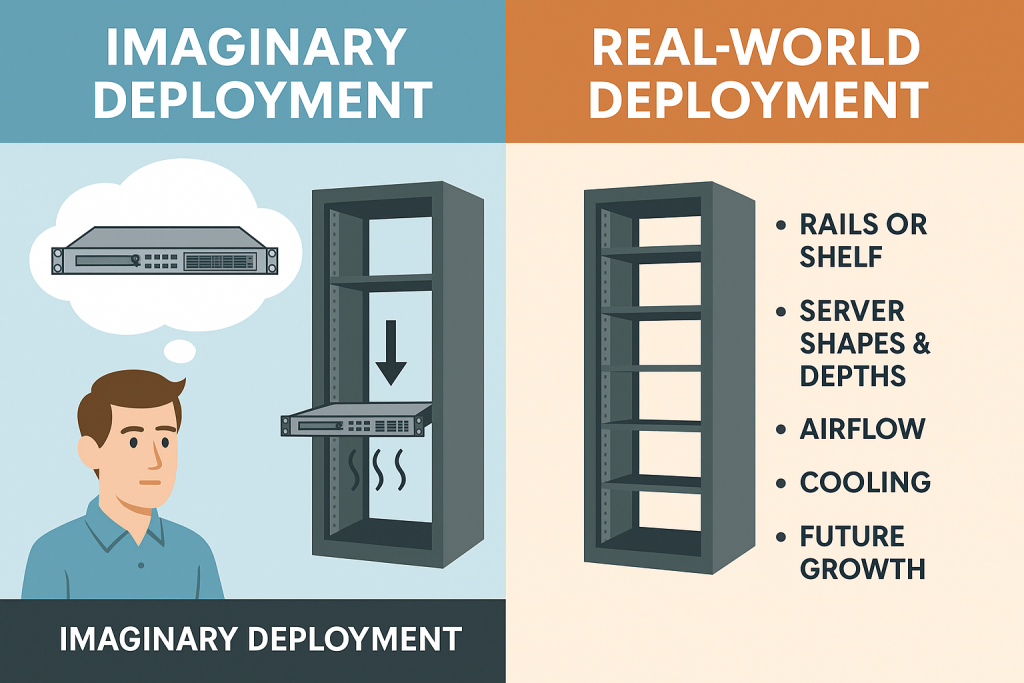

1 · The Myth of “Just Ship a 1U Server”

The dream scenario:

- You buy a sleek 1U server.

- You send it to the datacenter.

- A technician racks it, plugs it in, and you’re live in hours.

The real scenario:

- The rails don’t fit the cabinet.

- The colo has no shelf, or not a heavy-duty one.

- The datacenter pauses your install.

- You ship new rails (one or two weeks delay).

- You forgot the password to your IPMI and need KVM access.

- You sent a plug and transformer that don’t fit the datacenter’s PDU outlets.

- You forgot to include a fiber transceiver, or it’s the wrong wavelength.

- When installed, your unit overheats due to poor airflow.

- Remote hands spend hours diagnosing — billed at $150–$300/hour.

The fantasy that colocation is plug-and-play is one of the most expensive assumptions in IT.

2 · Physical Deployment Pitfalls

The physical layer is where most first-time colocations fail. It’s not glamorous, but if you get the physical part wrong, everything else falls apart.

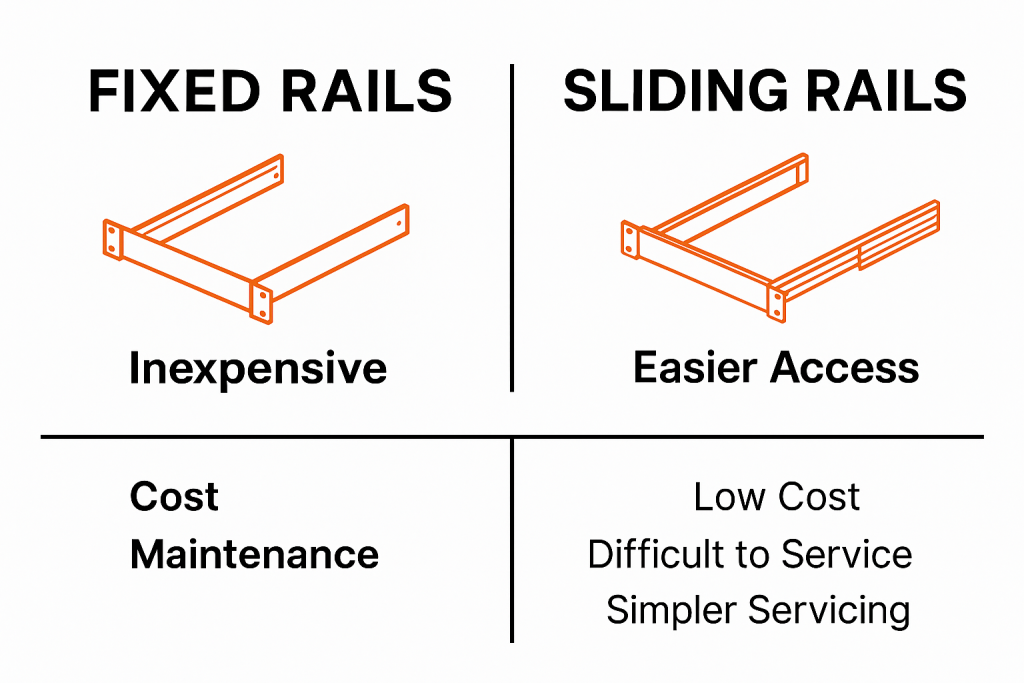

Rails: Fixed vs. Sliding

Fixed rails are cheap. Sliding rails are smart.

Fixed rails attach rigidly; any maintenance requires deracking the entire server. Sliding rails extend like drawers: safer, cleaner, and faster for technicians.

Real story:

A client once saved $50 by buying fixed rails instead of sliding ones. Six months later, a RAM stick failed. The datacenter billed two hours of remote hands time to derack and service the 80 lb 4U server. The “savings” ended up costing $350.

Sliding rails pay for themselves the first time something breaks.

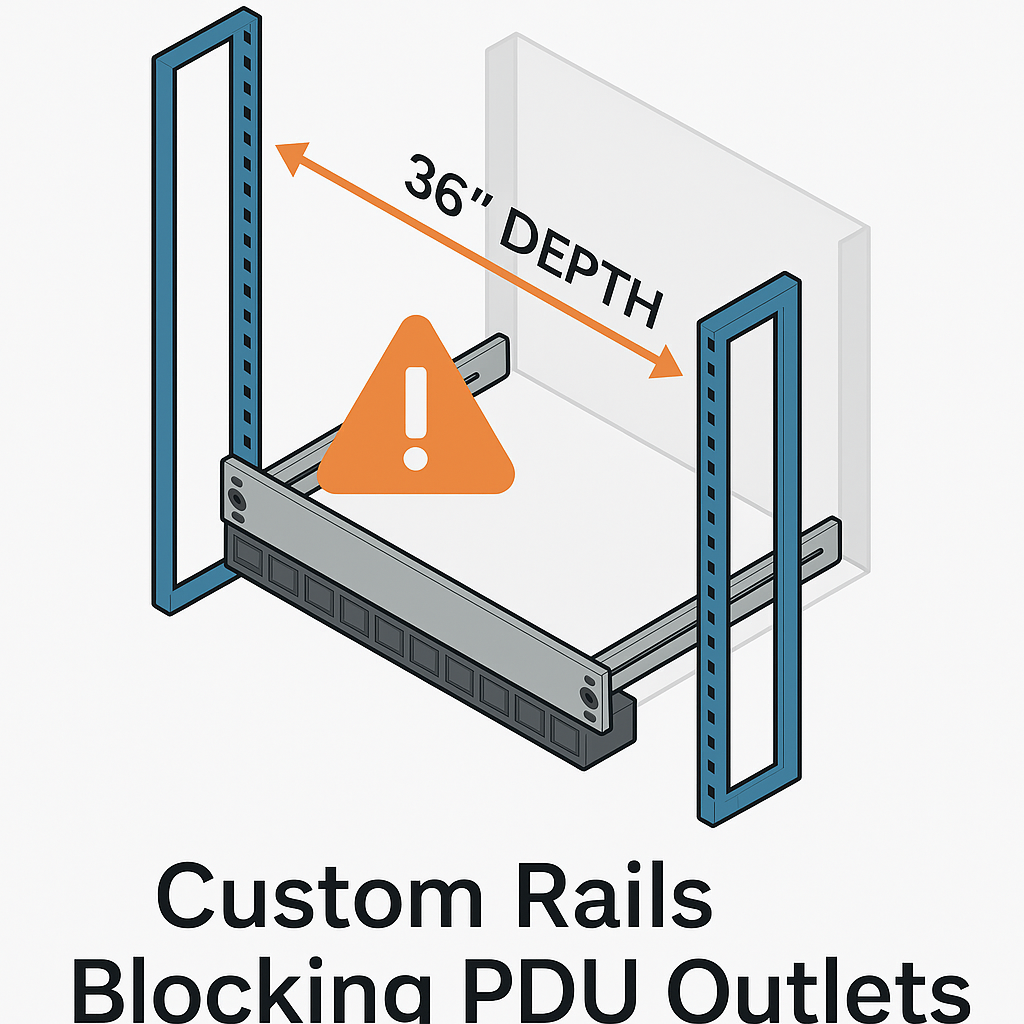

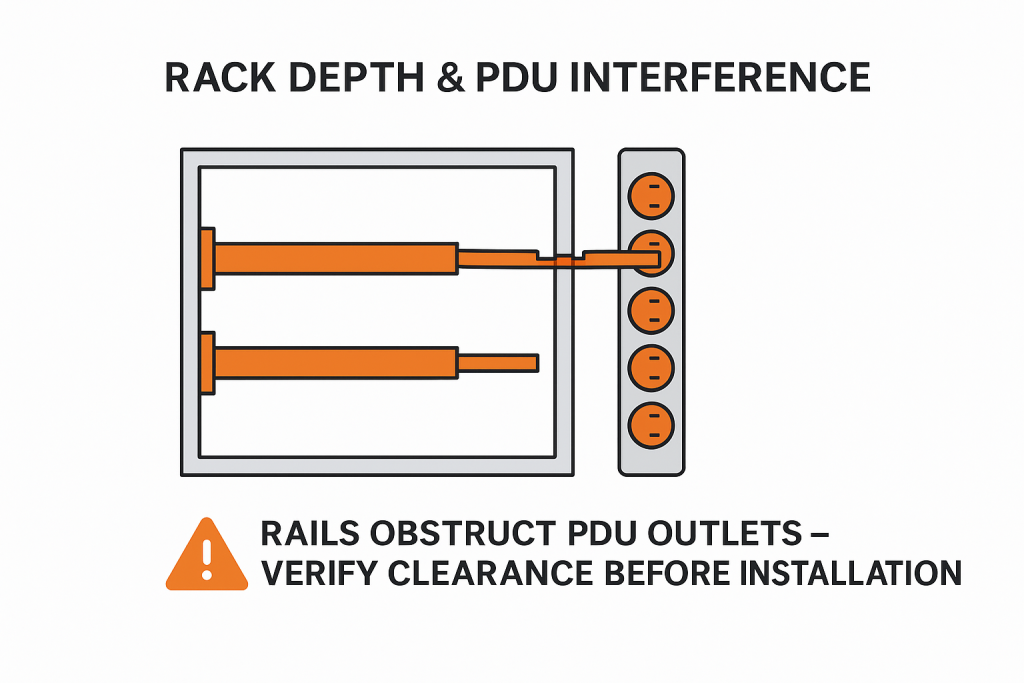

Rack Depth Mismatches

Not all datacenter racks are equal. Some are 30” deep, some 36”, some 42” or more. The rails you bought may only extend 28”.

If your rails can’t reach, they can’t mount.

If they extend too far, they may block the rear PDU outlets, which means the datacenter can’t power or cable your server.

Checklist: Physical Fit Pre-Deployment

- Measure your rails (front to back).

- Confirm datacenter cabinet depth.

- Ask if the rails are adjustable.

- Check that rails don’t block PDU outlets.

- Confirm clearance for cabling and airflow.
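The checklist above can be turned into a quick pre-shipment check. The sketch below is illustrative only: the `RailKit` fields, the function name, and the sample measurements are all hypothetical, and it assumes the number that matters is the distance between the cabinet's front and rear mounting posts, which you should confirm with the datacenter.

```python
from dataclasses import dataclass


@dataclass
class RailKit:
    min_depth_in: float      # shortest post-to-post distance the rails adjust to (assumed spec)
    max_depth_in: float      # longest post-to-post distance the rails adjust to (assumed spec)
    rear_overhang_in: float  # how far the rail hardware sticks out past the rear post


def check_rail_fit(rails: RailKit, post_to_post_in: float, rear_clearance_in: float) -> list[str]:
    """Return a list of problems; an empty list means no fit problem was detected."""
    problems = []
    if post_to_post_in > rails.max_depth_in:
        problems.append("rails too short to reach the rear posts")
    if post_to_post_in < rails.min_depth_in:
        problems.append("rails too long for this cabinet")
    if rails.rear_overhang_in > rear_clearance_in:
        problems.append("rails may block rear PDU outlets")
    return problems


# Hypothetical example: rails that adjust from 24 to 28 inches, cabinet posts 30 inches apart.
rails = RailKit(min_depth_in=24.0, max_depth_in=28.0, rear_overhang_in=1.5)
print(check_rail_fit(rails, post_to_post_in=30.0, rear_clearance_in=3.0))
# ['rails too short to reach the rear posts']
```

An empty list only means these three measurements line up. It does not replace sending the rail spec sheet to the datacenter before you ship.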

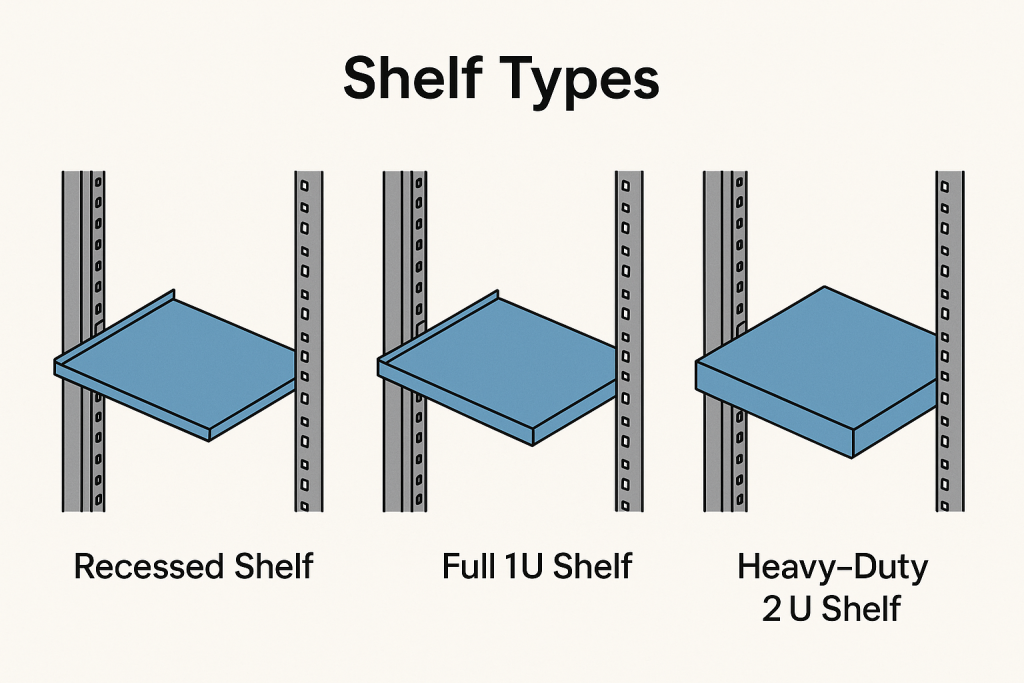

Shelf Problems

If your equipment lacks rails, you’ll need a shelf, but shelves bring their own set of issues.

- Recessed shelves: fit neatly within 1U of vertical space.

- Full 1U shelves: consume a full unit on their own, meaning your “1U” device now costs you 2U in space.

- Heavy-duty shelves: often require non-standard screw positions, overlap with adjacent gear, or take up a 2U footprint.

Example:

A firewall shipped without rails. The datacenter added a heavy shelf spanning 2U, then billed for 2U of monthly space. Over 24 months, that shelf cost more than the device it held.

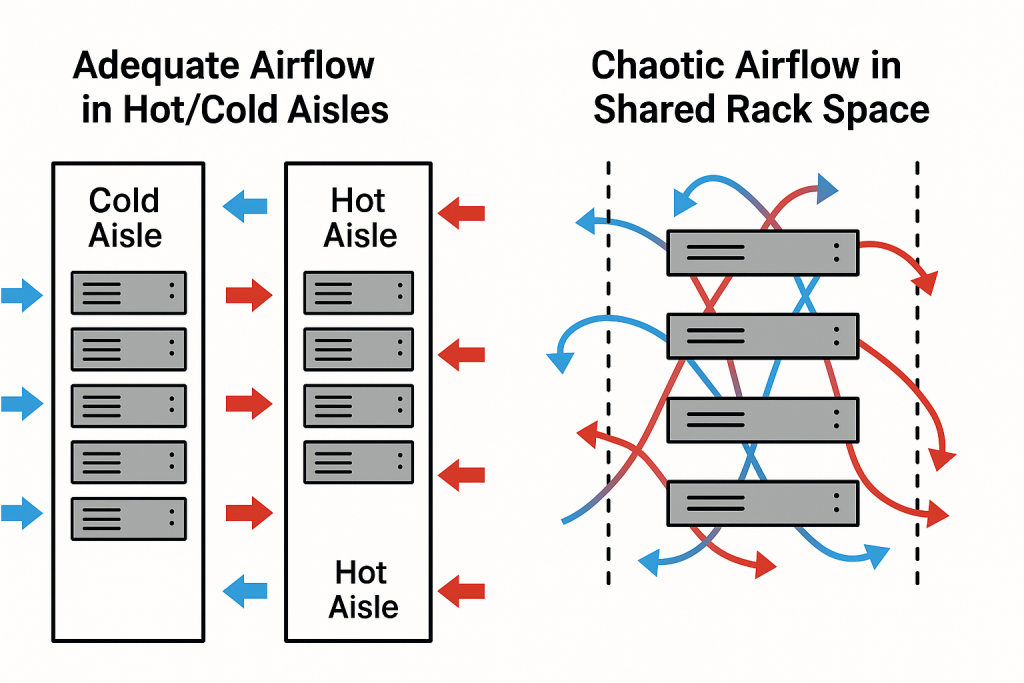

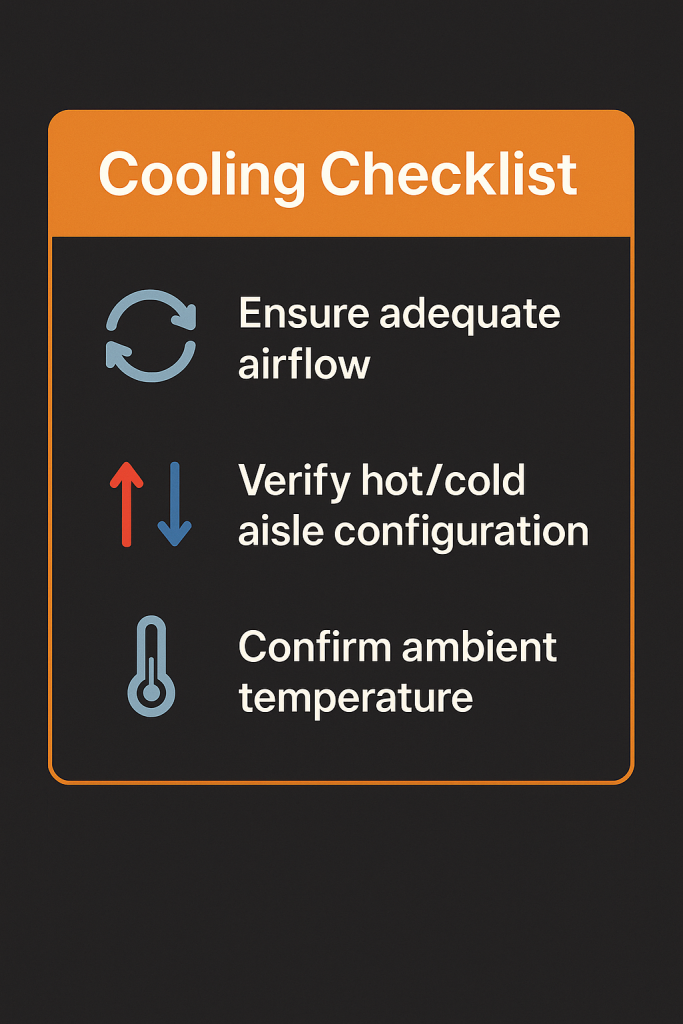

3 · Cooling and Airflow Pitfalls

Most customers assume “the datacenter handles cooling.”

That’s only half-true.

Shared racks mean shared airflow — and airflow patterns can vary drastically from one row to another.

Why 1U Servers Run Hotter

A 1U server is compact, dense, and often running enterprise CPUs, GPUs, or NVMe storage with limited airspace. There’s little margin for heat.

If the rack layout doesn’t give you enough cool intake air or if neighboring gear exhausts heat toward you, your equipment will throttle or fail.

When 2U Makes Sense:

Sometimes it’s smarter to lease 2U of space for a single 1U device. That extra unit gives your equipment breathing room, better airflow, and easier service access.

Checklist: Cooling Considerations

- Ask if the datacenter uses hot/cold aisles.

- Confirm airflow direction (front-to-back, side-to-side).

- Request inlet temperature guarantees.

- Consider reserving 2U for high-power devices.
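If the datacenter does commit to an inlet temperature figure, you can compare it against what your server's own inlet sensor reports. The following is a minimal sketch under stated assumptions: the readings are made-up sample values in degrees Celsius, and the 27 °C limit is a placeholder for whatever number appears in your contract.

```python
def inlet_violations(readings_c: list[float], guaranteed_max_c: float) -> list[tuple[int, float]]:
    """Return (index, temperature) for every reading above the guaranteed maximum."""
    return [(i, t) for i, t in enumerate(readings_c) if t > guaranteed_max_c]


# Hypothetical inlet readings collected from the server's sensor over time.
readings = [22.5, 24.0, 28.5, 31.0, 26.0]
print(inlet_violations(readings, guaranteed_max_c=27.0))
# [(2, 28.5), (3, 31.0)]
```

A log of violations like this gives you something concrete to send to the datacenter when asking for a different rack position or an extra unit of space.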

4 · Legacy Gear in a Modern Colo

Moving on-prem gear into a colocation cabinet rarely works cleanly.

Case Study: The Raritan KVM LCD Monitor + Keyboard Combo

A common office rack accessory, this pull-out console was designed for shallow 24–28” racks.

In a 34–36” colocation cabinet, its rails don’t reach the back posts.

What happens next?

- The KVM sits loose on a shelf.

- Every time you pull the monitor out, the entire unit slides.

- It risks falling or damaging cables.

If it worked fine in your office, that doesn’t mean it’ll survive in a datacenter.

Lesson: Don’t repurpose legacy gear. Buy hardware rated for full-depth colocation racks.

5 · Operational and Cost Pitfalls

“Free” Services That Aren’t

Many datacenters promise “free rack & stack” or “free remote hands.”

That offer expires the moment your deployment isn’t straightforward.

- Rails don’t fit: installation takes longer; you pay hourly labor.

- Shelves needed: monthly shelf rental fee.

- Improper mounting: future maintenance becomes billable.

Anecdote: The $600 Shelf Mistake

A client shipped a server with no rails. The datacenter installed it on a rental shelf at $25/month. Two years later, that shelf cost $600 — more than the proper rail kit would have.
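The arithmetic behind that anecdote is worth running before you ship anything. The sketch below uses the $25/month fee and 24-month term from the story. The $150 rail kit price is a hypothetical figure added for illustration, since the original bill did not state one.

```python
import math


def shelf_rental_cost(monthly_fee: float, months: int) -> float:
    """Total shelf rental paid over the given number of months."""
    return monthly_fee * months


def break_even_month(monthly_fee: float, rail_kit_price: float) -> int:
    """First month at which cumulative shelf rental meets or exceeds the rail kit price."""
    return math.ceil(rail_kit_price / monthly_fee)


print(shelf_rental_cost(25.0, 24))     # 600.0
print(break_even_month(25.0, 150.0))   # 6  (assuming a hypothetical $150 rail kit)
```

Under those assumptions, the rental shelf overtakes the rail kit by month six, and everything after that is pure extra cost.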

6 · Networking and Growth Complications

Colocation is more than power and space. The network side can trip you up just as badly.

Growth Planning

Some datacenters let you expand by adding more equipment to your existing VLAN. Others force you to rent a quarter or half-cabinet for additional devices.

If you anticipate growth — another router, firewall, or server — ask ahead of time if inter-rack VLAN extensions are supported.

Hybrid Integration

A few modern datacenters can connect colocated gear with their own bare-metal or virtual servers through internal VLANs. Many cannot. Knowing this up front helps avoid expensive migrations later.

Cross-Connects and Cloud Access

Need AWS Direct Connect, Azure ExpressRoute, or carrier access? Some providers only offer cross-connects from private cabinets, not shared racks. You might be forced into a more expensive footprint just to get fiber access.

Checklist: Future Growth Planning

- Ask about inter-rack VLAN capability.

- Verify cross-connect availability for shared racks.

- Check pricing for hybrid environments.

- Plan for future network scalability.

7 · Imaginary vs. Real-World Deployment

| Expectation | Reality |
|---|---|
| “1U fits everywhere.” | Rails don’t reach 36” cabinet; install delayed two weeks. |
| “Cooling is the datacenter’s problem.” | Neighboring gear in a shared rack exhausts heat toward your intake; your server throttles. |
| “Free rack & stack = no cost.” | Improper rails → $200/hour remote hands fees. |
| “Shelf solves everything.” | Shelf adds 2U → doubles monthly cost. |
| “On-prem gear is fine.” | Raritan KVM rails too short → unsafe install. |

8 · Best Practices – Lessons Learned the Hard Way

- Communicate before shipping. Send rail dimensions and airflow details.

- Choose sliding rails. Fixed rails cost more in labor later.

- Plan for cooling. Hot gear needs breathing space.

- Avoid legacy on-prem equipment. It rarely fits or cools correctly.

- Discuss network scalability up front. VLANs and cross-connects are not universal.

- Budget for hidden costs. Remote hands, shelf fees, and derack charges add up.

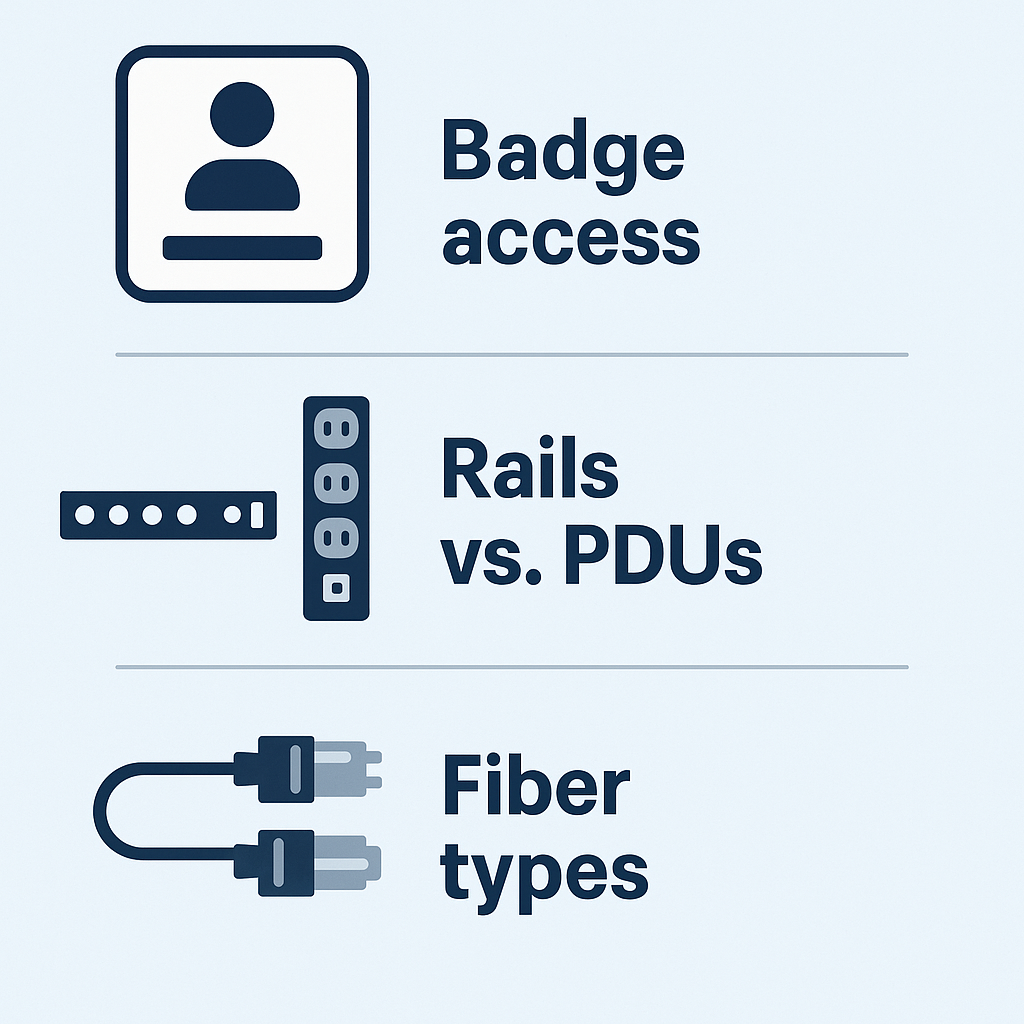

9 · Access, Cabling, and Connectivity Pitfalls

Colocation doesn’t just take away physical ownership — it also limits physical access. Many first-time colocators learn this the hard way.

Restricted Physical Access

Unlike your office or private datacenter, you can’t simply walk in and touch your equipment. Shared racks are controlled access zones.

- You’ll need to request an access badge or temporary visitor pass.

- Visits often require scheduling in advance — sometimes 24 to 48 hours.

- You may be required to have escorted access (a staff member accompanying you).

- Some facilities charge hourly for escorts.

That means if your server goes down at 2 a.m., you can’t just drive over and reboot it. You’ll wait for staff availability or pay emergency remote-hands rates.

Rails Blocking PDUs and Oversized Cabinets

Even in 36” or 42”-depth racks, certain rail designs extend too far backward, blocking rear PDUs. When rails prevent outlet access:

- Datacenter staff can’t plug in power cables or network lines properly.

- You may lose usable power ports or need to rent an alternate slot.

- Some facilities will flatly refuse to mount equipment that obstructs their PDUs.

Always confirm clearance between your rail brackets and the rear PDUs.

Fiber vs. Copper Confusion

Connectivity mistakes are among the most frustrating pitfalls because they can delay activation by weeks.

Common issues:

- No transceivers included. You ship a router expecting the datacenter to provide optics — they don’t.

- Wrong type of transceiver. Your SFP+ module is a multimode SR optic, but the datacenter’s patch panel is single-mode fiber.

- Mismatched connector standards. You expect LC-LC; they use SC-LC or MPO trunks.

- Fallback to copper. Because the optical interfaces are incompatible, the datacenter can only connect your port with a CAT6 patch cable, often limiting the link to 1 Gbps instead of 10 Gbps.

Checklist: Access and Connectivity Preflight

- Confirm your access process (badge, escort, scheduling).

- Verify cabinet depth and PDU clearance for rails.

- Ask the datacenter what fiber types (SMF/MMF) and connector standards they use.

- Include transceivers with your shipment.

- Label copper vs. fiber ports clearly.

- Test optics in advance with a loopback plug if possible.
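The fiber questions in this checklist reduce to a simple compatibility lookup once the datacenter has told you what they hand off. The sketch below is a hedged illustration: the `Handoff` type and `check_optic` function are hypothetical names, and the table covers only two common 10G optic types (10GBASE-SR on multimode, 10GBASE-LR on single-mode). Anything else is flagged for a manual check rather than guessed.

```python
from dataclasses import dataclass

# Two common 10G optic types and the fiber each one expects.
OPTIC_FIBER = {
    "10GBASE-SR": "MMF",  # 850 nm, multimode fiber
    "10GBASE-LR": "SMF",  # 1310 nm, single-mode fiber
}


@dataclass
class Handoff:
    fiber: str      # "SMF" or "MMF", as reported by the datacenter
    connector: str  # connector on the datacenter side, e.g. "LC" or "SC"


def check_optic(optic: str, optic_connector: str, handoff: Handoff) -> list[str]:
    """Return a list of mismatches between your transceiver and the datacenter handoff."""
    problems = []
    expected = OPTIC_FIBER.get(optic)
    if expected is None:
        problems.append(f"unknown optic type {optic!r}; check it by hand")
    elif expected != handoff.fiber:
        problems.append(f"{optic} expects {expected}, handoff is {handoff.fiber}")
    if optic_connector != handoff.connector:
        problems.append(
            f"connector mismatch: {optic_connector} vs {handoff.connector}; "
            "a matching patch cable is needed"
        )
    return problems


# Hypothetical case from the list above: SR optic shipped, single-mode handoff.
print(check_optic("10GBASE-SR", "LC", Handoff(fiber="SMF", connector="LC")))
# ['10GBASE-SR expects MMF, handoff is SMF']
```

A connector mismatch is usually fixed with the right patch cable, while a fiber-type mismatch means shipping a different transceiver, so it is the more important one to catch before the box leaves your office.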

10 · Conclusion – Cheap Done Wrong Is Expensive

1U colocation looks simple on paper. In practice, it’s a test of planning and precision.

Every corner you cut — rails, airflow, access, or optics — multiplies your future costs.

The datacenter is not your office.

You don’t control the racks, the aisles, or the schedule.

You’re operating inside someone else’s infrastructure, by their rules.

Do it right, and colocation offers incredible reliability and scalability.

Do it wrong, and it becomes a never-ending source of frustration, delays, and surprise bills.

In short:

Measure twice, ship once, and always talk to your datacenter before you send anything.

And when you’re ready, choose Metanet for your 1U colocation. We have years of experience and a process for sorting out all of these details before you sign up, so your deployment runs smoothly.